Scaling Requirements Quality Across Global Teams

A practical guide for building consistency, adoption, and proof of value

Scaling requirements quality across global teams is not about fixing individual documents. It requires a structured approach that ensures consistency, efficiency, and adoption across divisions and tools.

This guide outlines five practical pillars: starting with the right pilot, centralizing rules, measuring progress, balancing automation with human expertise, and supporting adoption through change management.

Together, these steps help leaders move from isolated improvements to enterprise-wide clarity that is measurable, sustainable, and credible.

Introduction: The Challenge of Scale

Improving the quality of requirements is not difficult in isolation. A motivated engineer can apply a checklist, follow a guide, or adjust their language to reduce ambiguity. The challenge comes when these practices must scale across entire organizations.

Teams author requirements in Word and Excel as well as in dedicated platforms such as DOORS and Jama. Different business units often maintain their own habits, templates, and interpretations of clarity. This creates a rollout challenge: how to apply consistent quality standards across tens of thousands of requirements and hundreds of engineers without creating overhead that slows delivery.

The obstacle is not a lack of commitment but variation. As one systems engineering leader noted, teams perform better when they share fundamentals such as singular statements, testable outcomes, and clear acceptance criteria. Without this common foundation, quality becomes subjective and difficult to scale.

This guide outlines a strategy for scaling requirements quality across the enterprise. It is built around five pillars: start with the right pilot, centralize rules, measure consistently, balance automation with human expertise, and support adoption through change management.

Step 1: Start with the Right Pilot

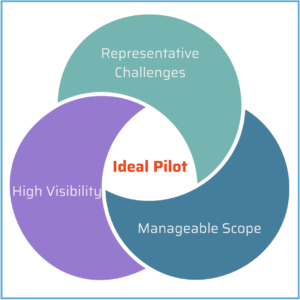

Scaling always begins with a focused pilot. The goal is not simply to test tools or rules, but to establish proof of value in a way that builds momentum.

How to choose the right pilot:

- Select a project with visibility. When executives see results, support grows.

- Make sure the team faces challenges common across the organization, such as fragmented authoring styles, mixed tools, or high compliance pressure.

- Keep the scope manageable. A pilot that is too broad overwhelms reviewers, while one that is too narrow feels irrelevant.

What to measure in a pilot:

- Baseline requirement quality: How many statements are ambiguous, incomplete, or unverifiable?

- Review effort: How many hours are consumed by manual inspection?

- Improvement after intervention: What percentage of requirements meet standards after applying structured support?
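Once each requirement has been classified, the three pilot measures above reduce to simple arithmetic. The minimal Python sketch below assumes a reviewer or tool has already tagged each requirement as ambiguous, incomplete, or unverifiable. The `Requirement` class, the `pilot_summary` function, and the sample figures are hypothetical. They only illustrate how a baseline snapshot and a post-intervention snapshot might be compared.

```python
from dataclasses import dataclass


@dataclass
class Requirement:
    """Hypothetical per-requirement classification recorded during a pilot review."""
    req_id: str
    ambiguous: bool = False
    incomplete: bool = False
    unverifiable: bool = False

    def meets_standard(self) -> bool:
        # In this sketch, a requirement meets the standard only if it has none of the three issues.
        return not (self.ambiguous or self.incomplete or self.unverifiable)


def pilot_summary(reqs: list[Requirement], review_hours: float) -> dict:
    """Summarize one snapshot: issue counts, share meeting the standard, and review effort."""
    total = len(reqs)
    passing = sum(r.meets_standard() for r in reqs)
    return {
        "total": total,
        "ambiguous": sum(r.ambiguous for r in reqs),
        "incomplete": sum(r.incomplete for r in reqs),
        "unverifiable": sum(r.unverifiable for r in reqs),
        "pct_meeting_standard": 100.0 * passing / total if total else 0.0,
        "review_hours": review_hours,
    }


# Illustrative data only: the same four requirements before and after structured support.
baseline = [
    Requirement("R1", ambiguous=True),
    Requirement("R2"),
    Requirement("R3", incomplete=True, unverifiable=True),
    Requirement("R4"),
]
after = [
    Requirement("R1"),
    Requirement("R2"),
    Requirement("R3", unverifiable=True),
    Requirement("R4"),
]

before = pilot_summary(baseline, review_hours=12.0)
post = pilot_summary(after, review_hours=7.5)
print(before["pct_meeting_standard"], post["pct_meeting_standard"])  # 50.0 75.0
```

The second snapshot is only meaningful if the first one is recorded before any intervention begins.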

Industry insight: Pilots focused on compliance-heavy specifications or projects with diverse stakeholders often provide the clearest proof of value. These documents usually reveal a high baseline of ambiguous or unverifiable requirements, which makes improvements more visible and persuasive. Pilots that are too small or narrowly scoped may show good results but fail to generate organizational momentum.

Supporting evidence from the field

The JIP33 initiative from the International Association of Oil & Gas Producers (IOGP) demonstrated the value of aligned expectations. Once contributors adopted consistent wording and clarity rules, ambiguity dropped and review cycles accelerated. This example shows how early alignment can produce results that scale.

Step 2: Centralize Rules

A rollout cannot succeed if every division defines quality differently. Consistency requires centralization.

What centralization looks like:

- A single rule set, grounded in accepted guidance such as the INCOSE Guide for Writing Requirements but adapted to the organizational context.

- The same checks applied in every requirements environment, whether authors work in Word, Excel, DOORS, or Jama.

- Clear guidance for authors that goes beyond yes or no validation and provides examples and rationale.
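A centralized rule set can be as simple as one shared definition that every tool integration imports. The Python sketch below is hypothetical: the `Rule` structure, the two rule IDs, and `check_requirement` are illustrative names. The rules are loosely inspired by the kind of guidance in INCOSE's Guide for Writing Requirements and are not copied from it. The sketch shows two properties from the list above. First, the checks are identical regardless of source tool. Second, each finding carries a rationale and an example fix instead of a bare pass or fail.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    """One centrally maintained rule: the check plus the guidance shown to authors."""
    rule_id: str
    violates: Callable[[str], bool]  # True when the requirement text breaks the rule
    rationale: str
    example_fix: str


# Hypothetical organization-wide rule set. It is defined once and imported by every
# tool integration, so Word, Excel, DOORS, and Jama users are checked identically.
RULES: tuple[Rule, ...] = (
    Rule(
        rule_id="R-SHALL",
        violates=lambda t: re.search(r"\bshall\b", t, re.IGNORECASE) is None,
        rationale="Binding requirements use 'shall' so the obligation is explicit.",
        example_fix="The pump shall start within 2 s of the start command.",
    ),
    Rule(
        rule_id="R-SINGULAR",
        violates=lambda t: len(re.findall(r"\bshall\b", t, re.IGNORECASE)) > 1,
        rationale="One obligation per statement keeps each requirement verifiable on its own.",
        example_fix="Split the statement into separate requirements, each with one 'shall'.",
    ),
)


def check_requirement(text: str, source: str) -> list[dict]:
    """Apply the shared RULES to one requirement, whatever tool it came from."""
    return [
        {
            "source": source,
            "rule": rule.rule_id,
            "rationale": rule.rationale,
            "example_fix": rule.example_fix,
        }
        for rule in RULES
        if rule.violates(text)
    ]


text = "The system shall log errors and shall notify the operator."
from_word = check_requirement(text, source="Word")
from_doors = check_requirement(text, source="DOORS")
print([f["rule"] for f in from_word])  # ['R-SINGULAR']
```

Keyword checks like these are deliberately crude. The design point is that the rules live in one place, so changing a rule changes it for every tool at once.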

Why it matters:

Without centralized standards, adoption stalls. One team’s “good” requirement looks different from another’s, so executives cannot compare quality across divisions, and engineers lose trust when the rules shift depending on the tool.

Industry insight: INCOSE’s Guide for Writing Requirements emphasizes the importance of applying rules consistently across an organization. In practice, global teams often struggle when one division defines “verifiable” or “unambiguous” differently from another. This lack of alignment slows integration and creates rework. Centralization provides a common foundation while still allowing gradual refinement.

Step 3: Measure Consistently and Track Progress Over Time

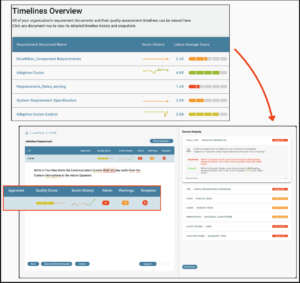

Progress cannot be proven without measurement. Leaders who want to scale adoption need clear, repeatable metrics.

What to measure:

- Percentage of requirements meeting defined quality thresholds.

- Reduction in high-risk terms and incomplete statements.

- Review hours saved compared to baseline.

- Trends over time across projects and cycles.

Why it matters:

Executives need evidence of improvement. Engineers need to see their efforts making a difference. Auditors need objective proof. Without measurement, requirements quality becomes an abstract ideal rather than a visible outcome.

How to present results:

- Before-and-after comparisons: Show the percentage of unclear or unverifiable requirements at the start of a pilot versus after interventions.

- Dashboards: Summarize quality metrics across projects or teams to highlight adoption progress.

- Trend lines: Demonstrate gradual improvement across multiple review cycles or releases.
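One repeatable calculation can drive both the dashboard view and the trend-line view described above. The sketch below assumes hypothetical snapshot records of the form (project, review cycle, requirements checked, requirements passing). The project names and counts are invented for illustration.

```python
from collections import defaultdict

# Hypothetical snapshot records: (project, review cycle, requirements checked, requirements passing).
snapshots = [
    ("Project A", 1, 200, 120),
    ("Project A", 2, 210, 150),
    ("Project A", 3, 215, 180),
    ("Project B", 1, 80, 40),
    ("Project B", 2, 90, 63),
]


def pass_rate_trends(records):
    """Return {project: [(cycle, pct_passing), ...]} ordered by cycle, ready for a dashboard or trend line."""
    trends = defaultdict(list)
    for project, cycle, checked, passing in records:
        pct = round(100.0 * passing / checked, 1) if checked else 0.0
        trends[project].append((cycle, pct))
    return {project: sorted(points) for project, points in trends.items()}


def before_after(points):
    """Compare the first and latest cycle for one project (the before-and-after view)."""
    return points[0][1], points[-1][1]


trends = pass_rate_trends(snapshots)
print(trends["Project A"])                # [(1, 60.0), (2, 71.4), (3, 83.7)]
print(before_after(trends["Project B"]))  # (50.0, 70.0)
```

The numbers stay comparable across teams only because the same formula is applied to every project and cycle.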

Industry insight: Consistency in metrics is what builds credibility. Executives are most persuaded by evidence of hours saved, risk reduced, or costs avoided. Engineers are motivated by seeing fewer clarification requests, smoother reviews, and documents that require less rework. Both views are necessary to sustain adoption.

Step 4: Balance Automation and Human Expertise

Automation alone cannot solve requirements quality. Context, judgment, and domain expertise remain essential. At the same time, human-only review cannot scale.

The role of automation:

- Flagging ambiguous terms, incomplete statements, or structural issues.

- Ensuring consistency across thousands of requirements.

- Providing immediate suggestions and guidance at the point of writing.
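Flagging ambiguous terms at the point of writing is one of the most mechanical of these tasks. The sketch below is a minimal, hypothetical example. The `AMBIGUOUS_TERMS` list and its suggestions are illustrative and do not come from a published vocabulary; a real deployment would draw them from the centralized rule set. The function returns each vague term with its position and a suggested fix, which an editor integration could use to highlight the term as the author types.

```python
import re

# Illustrative vague terms mapped to the guidance shown to the author.
AMBIGUOUS_TERMS = {
    "user-friendly": "State a measurable usability criterion.",
    "as soon as possible": "Give a time limit with units, e.g. 'within 2 s'.",
    "appropriate": "Name the specific condition or value.",
    "and/or": "Split the requirement or state which combination applies.",
    "etc.": "List every item explicitly.",
}


def flag_ambiguous_terms(text: str) -> list[tuple[str, int, str]]:
    """Return (term, character offset, suggestion) for each vague term, in text order."""
    hits = []
    for term, suggestion in AMBIGUOUS_TERMS.items():
        # Lookarounds stop "appropriate" from matching inside "inappropriate".
        pattern = r"(?<!\w)" + re.escape(term) + r"(?!\w)"
        for match in re.finditer(pattern, text, re.IGNORECASE):
            hits.append((term, match.start(), suggestion))
    return sorted(hits, key=lambda hit: hit[1])


draft = "The display shall be user-friendly and respond as soon as possible."
for term, offset, suggestion in flag_ambiguous_terms(draft):
    print(f"{offset}: '{term}' -> {suggestion}")
```

Returning a suggestion alongside each hit keeps the check supportive rather than purely judgmental.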

The role of humans:

- Interpreting context and intent.

- Making trade-offs between competing constraints.

- Communicating across disciplines and stakeholders.

The balance:

Automation should handle repetitive, objective checks. Humans should focus on nuance, intent, and system-level reasoning. Together, they create a process that is efficient, scalable, and credible.
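One way to put this division of labor into practice is to run automated checks first and route the results. The sketch below assumes a hypothetical policy. Requirements with automated findings go back to the author. Requirements that pass go to a human reviewer, who can then spend that time on intent and trade-offs rather than wording. The `triage` function and the deliberately simple `objective_check` stand-in are illustrative names and are not taken from any specific tool.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ReviewQueues:
    # Objective, machine-detectable issues: returned to the author to fix first.
    author_fixes: dict[str, list[str]] = field(default_factory=dict)
    # Requirements that pass automated checks: humans judge intent, context, and trade-offs.
    human_review: list[str] = field(default_factory=list)


def objective_check(text: str) -> list[str]:
    """Deliberately simple stand-in for an automated checker."""
    findings = []
    if "shall" not in text.lower():
        findings.append("missing 'shall'")
    if "tbd" in text.lower():
        findings.append("contains placeholder 'TBD'")
    return findings


def triage(requirements: dict[str, str],
           check: Callable[[str], list[str]] = objective_check) -> ReviewQueues:
    """Route each requirement to the author or to human review based on automated findings."""
    queues = ReviewQueues()
    for req_id, text in requirements.items():
        findings = check(text)
        if findings:
            queues.author_fixes[req_id] = findings
        else:
            queues.human_review.append(req_id)
    return queues


queues = triage({
    "R1": "The pump shall start within 2 s of the start command.",
    "R2": "The pump starts quickly.",
    "R3": "The pump shall deliver TBD litres per minute.",
})
print(queues.human_review)  # ['R1']
print(queues.author_fixes)
```

Passing the automated checks does not make a requirement correct. It only means that the remaining review effort can go to the questions that need a domain expert.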

Industry insight: Automated checks are effective at surfacing common patterns of ambiguity or incompleteness. Human reviewers remain essential for determining whether a requirement communicates the correct intent in its domain. Adoption improves when automation is positioned as supportive of engineers rather than as a replacement for their judgment.

Step 5: Plan for Change Management

Even the best technical rollout can fail if adoption is not supported. Engineers are cautious about new processes, especially if they perceive them as adding overhead.

What helps adoption:

- Training sessions that emphasize how quality standards make authoring easier, not harder.

- Communication that shows early wins from pilots.

- Feedback loops that allow engineers to suggest improvements.

Why it matters:

Adoption challenges are not unique to requirements quality. Any new standard or process risks being seen as bureaucracy unless value is demonstrated early. When leaders highlight tangible benefits such as reduced clarification requests, smoother compliance preparation, or faster reviews, engineers are more likely to embrace the change.

Scaling requirements quality is as much about culture as it is about tools. Leaders must guide both.

Common Pitfalls to Avoid

- Pilots without baselines: Without a starting point, improvement cannot be measured.

- Too much complexity too soon: Overloading pilots with rules discourages adoption.

- Different rules for different tools: Inconsistency undermines credibility.

- Over-reliance on automation: Context and nuance are lost.

- Neglecting communication: Engineers need to see value, not just rules.

Why Scaling Fails Without Structure

Many organizations attempt broad rollouts without a clear framework and then struggle with stalled adoption, inconsistent standards, and no measurable progress. By contrast, organizations that start small, centralize rules, and measure consistently build the credibility needed to expand successfully.

Conclusion: Scaling with Confidence

Scaling requirements quality is not about applying more effort. It is about designing systems that make clarity sustainable across thousands of requirements and hundreds of engineers.

The strategy is simple but powerful:

- Start small with the right pilot.

- Centralize rules.

- Measure consistently and show progress.

- Balance automation with human expertise.

- Support adoption through change management.

When organizations follow this path, they move beyond isolated improvements to enterprise-wide consistency. Executives gain proof of value. Engineers spend less time in review and more time innovating. Auditors see objective evidence.

Scaling clarity is not just possible. It is practical, measurable, and transformative.